My last blog post was about sidechains and how SPV proofs and reorg proofs were the key ingredients for transferring funds from one chain to another. In this post I will talk about "compact SPV proofs". As usual, I'm writing to educate myself, and it's my hope that it'll be useful for others too. I'm posting a link to this post on reddit (r/bitcoin) and I hope we can get a discussion there about errors and improvements you find in this post.

The problem

A plain SPV proof will most often not be small enough to fit in a transaction, because a scriptSig is not allowed to be bigger than 10000 bytes according my understanding of the code. An SPV proof of a day's work would weigh about 144*80 = 11520 bytes. Add to that the size of the transaction containing the output and a merkle path and you're well above 12000 bytes. There's also a rule that says no push must be larger than 520 bytes. This limit must definitely be lifted if we're ever going to push an SPV proof, compact or not.

Compact SPV proofs

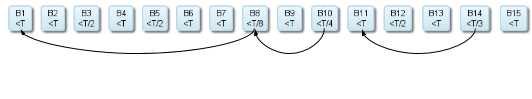

The sidechains paper describes "Compact SPV proofs", which is a probabilistic way to prove work. Instead of providing all blocks from A to B, you only provide the "lucky blocks". A lucky block is a block whose header hash is much less than the current difficulty target, T. It could be for example <T/2 or <T/7. Because hash values are evenly distributed across the the 256 bit numbers, half of the blocks between A and B should statistically have hashes less than T/2. Likewise, an eighth of the blocks should statistically have a hash less than T/8. So instead of including all block headers, we can use a few lucky blocks to prove that it's probable enough that the required work has been done. Beginning from block B15 in the picture below, we select B15, B14, B11, B10, B8 and B1. That's 6 block headers instead of 15:

In the paper they say that the expected number of blocks to include is log2(number of blocks). So to prove a chain of 1024 blocks you would only need about 10 block headers.

Great! But we cannot use this as a proof yet, because there's nothing proving that B8 commits to B1, or that B10 commits to B8, and so on. In a plain SPV proof we can follow the "previous block hash" in the block headers, but since we are now skipping blocks, there's no way to connect the 6 blocks. The sidechains paper suggest that we let each block commit to a merkle tree of all block hashes, pretty much in the same way that a block commits to all its transactions. This merkle root could be stored in some special location, for example in the coinbase transaction. Using this merkle tree we can now create a proof that connects the 6 blocks:

The proof would consist of

| Block header | Partial merkle path | Comment |

|---|---|---|

| B15 | right:=, left:s, left:k, left:g, left:e, left:c, left:a | This merkle path connects B15 to the merkle root. The '=' means that hash of B15 is duplicated to satisfy the merkle tree. |

| B14 | - | We don't need a merkle path here because B15 have an old fashioned link to B14 in its block header. |

| B11 | right:B12, left:q | Connects B11 with B15. The hash(q|r) will produce k which is part of the merkle path of B15 above. |

| B10 | - | Previous block hash in the B11 header is used, as in B14. |

| B8 | left:B7, left:o, left:i | Here we need B7, o, and i to be able to produce g wich connects us to the path of B15. |

| B1 | right:B2, right:n | B2 and n lets us create hash i which we can connect to the merkle path of B8. |

As you can see, the partial merkle paths gets connected to each other and thus it's proven that B15 commits to B14, B11, B10, B8 and B1.

Attack is easy

There is a problem here: The probability of finding lucky blocks early is not negligible. The paper uses an example of an attacker having 10% of the total hashpower. The attacker is trying to produce a fraudulent chain from which he creates a compact SPV proof to fool the receiving chain into accepting his output that doesn't exist on the "honest" chain. Remember that he doesn't compete with the honest chain in the traditional sense, but he's trying to produce lucky blocks so that he can create a compact SPV proof faster than the actors on the honest chain can produce 900 blocks. So 90% of the network is working on the honest chain and 10% is working on the fraudulent "chain". Let's say that the attacker needs to prove 900 blocks worth of work in order to transfer funds. 10% of the the hashpower should make it possible to produce about 100 blocks in the time it takes the honest network to produce 900 blocks. The probability that he finds a lucky block with hash <T/900 is 1 minus the probability that he don't:

\[ 1-(\frac{899}{900})^{100}\approx 0.105\]

He could also try to find two lucky blocks (LB) with hash <T/450 with the probability

\[ 1-P(no\ LB)-P(one\ LB)=1-(\frac{449}{450})^{100}-\frac{1}{450}(\frac{449}{450})^{99} \approx 0.20\]

Or why not N lucky blocks with hash <NT/900 with the probability

\[ 1-\sum_{n=0}^{N-1}(\frac{N}{900})^{n}(\frac{900-N}{900})^{100-n}\]

| N | Lucky block threshold | Probability of success |

|---|---|---|

| 1 | T/900 | 0.11 |

| 2 | 2T/900 | 0.20 |

| 3 | 3T/900 | 0.28 |

| 4 | 4T/900 | 0.36 |

| 5 | 5T/900 | 0.42 |

| 6 | 6T/900 | 0.48 |

| 10 | 10T/900 | 0.67 |

Now how would a fraudulent proof look if we use N=3? The attacker must produce 4 blocks, one with the output and 3 lucky blocks. But for the proof to be valid he needs 299 fake block hashes in between the revealed blocks. This makes it harder for the attacker, since he must decide on N before he starts mining for lucky blocks. The fake blocks can be any number less than T.

Mitigation

The success probabilities in the table above are quite depressing. To make it harder to pull off such an attack, the paper proposes a few approaches with limiting the maximum skip size (N), either statically, or dynamically. This would bring the proof to a linear growth or a slightly sublinear growth, respectively.

It also suggest requiring that some random (seeded by the proof) header being revealed.

We could add a rule for lucky blocks saying "The block header at X (0 < X < skiplength) blocks back must also be revealed along with a partial merkle tree for it". X could be derived from some predefined sequence of bits from the lucky block hash. In the example above, X must be greater than 0 and less then 300. An honest prover have no problems with this, since he's got all blocks readily available, but an attacker using fake blocks does not, and he'll have to throw away his lucky block and start over. This pushes the attacker into using bigger N (higher number of lucky blocks, but less lucky) and creating some, but not all, real blocks in between the lucky blocks. This increases the proof by a constant factor.

Another, more space-efficient, way would be to have the last block hash seed the selection of one or a few random non-revealed blocks. That would increase the proof size by a constant (not a constant factor) while still making proofs with lots of fake non-revealed blocks very risky for the attacker. Let Q be the number of required blocks to reveal and R be the number of real blocks added (apart from the lucky blocks). The success probability would, assuming R>=Q, become:

\[ (1-\displaystyle \sum_{n=0}^{N-1}(\frac{N}{900})^{n}(\frac{900-N}{900})^{100-R-n})\prod_{k=0}^{Q-1}\frac{R-k}{900-N-k}\]

The original formula is not very affected by this; it just changes the exponent 100-n to 100-R-n which makes finding the lucky blocks a little bit harder. The big thing is the new big fat factor at the end. The table below shows the factor for different combinations of R and Q for N=3:

| Q | R=1 | R=2 | R=4 | R=8 | R=16 |

| 1 | 0.0011 | 0.0022 | 0.0045 | 0.0089 | 0.018 |

| 2 | 2.5E-006 | 1.5E-005 | 7.0E-005 | 0.00030 | |

| 4 | 3.7E-011 | 2.6E-009 | 6.8E-008 | ||

| 8 | 9.9E-020 | 1.3E-015 | |||

| 16 | 1.4E-034 |

A big hazard for the attacker here is that he doesn't know in advance what blocks to reveal, he must perform the whole sequence of lucky blocks, and then when he's finished, he gets to know what Q blocks to reveal.

How to validate reorg proofs

It's important that reorg proofs are compared against the required amount of work in the confirmation period, and not against the actual compact SPV proof. If an attacker is really lucky and finds a proof exceeding both the confirmation period and contest period, then there would be no way to stop him if reorg proofs are compared against this very lucky proof.

Let's assume that the attacker produces a fake proof when the honest chain has just produced 700 of 900 blocks and that the contest period is 1000 blocks. Then, the honest chain has the time corresponding to 1000 blocks to produce merely 201 blocks and then someone can create a reorg proof to invalidate the fake SPV proof.

Denial-of-service attack

A gangster may want to DOS someone by producing fake reorg proofs for someone else's SPV locked output. He only need to provide proof of more work than the confirmation period, and he's got the confirmation period PLUS the contest period to produce a fake reorg proof. To reduce this risk, the contest period should not be substantially longer than the confirmation period.

Summary

I've described how compact SPV proofs can be made with log2(n) block headers. But this opens up the possibility for attackers to pretty easily create fake proofs. A typical fake proof can be pulled off with a probability of >0.1. To mitigate this we can require the proof to reveal some other block headers. Those block headers are selected by the last block in the proof. This reduces the risk of fake proof success by several orders of magnitude, depending on how many blocks must be revealed.